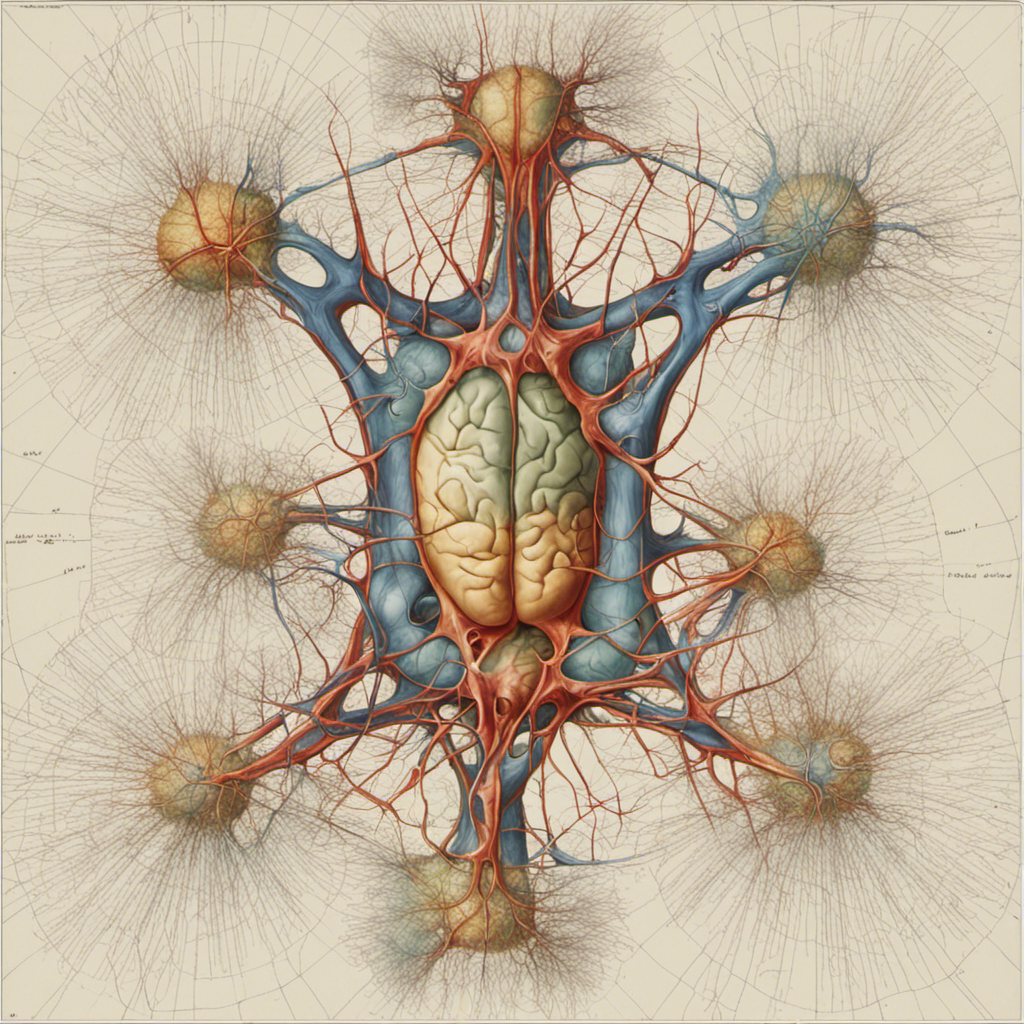

Neural networks have emerged as a powerful machine learning approach for modeling complex functions and tackling difficult real-world problems. Inspired by biological neural networks, they consist of interconnected nodes or artificial neurons that transmit signals between each other. Modern neural networks are organized into layers that hierarchically transform raw input data into increasingly abstract representations through feature extraction. This multilayered architecture enables neural networks to learn sophisticated mappings from high-dimensional inputs to outputs.
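The layer-by-layer transformation described above can be illustrated with a minimal sketch. The weights, biases, layer sizes, and the names `dense` and `forward` are hypothetical values chosen by hand for illustration; a real network would learn its parameters from data.

```python
import math

def relu(z):
    """Rectified linear unit: pass positive values through, clamp negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights . inputs + bias) per output unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical hand-picked parameters: 3 inputs -> 2 hidden units -> 1 output.
W1 = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0]]
b2 = [0.0]

def forward(x):
    hidden = dense(x, W1, b1, relu)         # first layer: intermediate features
    return dense(hidden, W2, b2, sigmoid)   # second layer: final output

output = forward([1.0, 2.0, 3.0])
print(output)
```

Each call to `dense` consumes the previous layer's output, which is the sense in which deeper layers operate on progressively more abstract representations of the raw input.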

Two major classes of neural networks have risen to prominence:

– Discriminative neural networks directly map inputs to target outputs and excel at supervised learning tasks such as classification, regression, and pattern recognition. Convolutional neural networks (CNNs), for example, are a standard architecture for computer vision applications such as image classification.

– Generative neural networks model the probability distribution of the data and can synthesize new samples from that distribution. They are used for unsupervised learning tasks such as generating synthetic images, audio, and text. Generative adversarial networks (GANs) pair two competing neural networks, a generator and a discriminator, and can produce highly realistic synthetic data (Goodfellow et al., 2014).

The flexibility of neural networks stems from their modular, differentiable structure, which can be optimized through backpropagation and stochastic gradient descent. This has enabled the development of customized architectures for different domains. For instance, recurrent neural networks (RNNs) use temporal connections between nodes to process sequential data such as text and speech. Attention mechanisms let RNNs focus selectively on relevant parts of the input, and Vaswani et al. (2017) later showed that attention alone can replace recurrence.
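To make the optimization step concrete, here is a minimal sketch of backpropagation combined with stochastic gradient descent on a tiny two-layer model. The toy data, the model shape, the learning rate, and all function names are assumptions made for illustration, not a method taken from this chapter.

```python
import math
import random

# Toy data (an assumption for illustration): learn y = tanh(2x) on five points.
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
data = [(x, math.tanh(2.0 * x)) for x in xs]

# Model: y_hat = w2 * tanh(w1 * x + b1) + b2
params = {"w1": 0.5, "b1": 0.0, "w2": 0.5, "b2": 0.0}

def forward(p, x):
    """Return the hidden activation and the prediction for one input."""
    h = math.tanh(p["w1"] * x + p["b1"])
    return h, p["w2"] * h + p["b2"]

def mean_squared_error(p):
    return sum((forward(p, x)[1] - y) ** 2 for x, y in data) / len(data)

def backward(p, x, y):
    """Gradients of the squared error for one example, via the chain rule."""
    h, y_hat = forward(p, x)
    d_out = 2.0 * (y_hat - y)                 # dL/dy_hat
    d_pre = d_out * p["w2"] * (1.0 - h * h)   # back through tanh: tanh' = 1 - h^2
    return {"w1": d_pre * x, "b1": d_pre, "w2": d_out * h, "b2": d_out}

def train(p, learning_rate=0.1, epochs=200, seed=0):
    """Stochastic gradient descent: one parameter update per shuffled example."""
    rng = random.Random(seed)
    p = dict(p)                # leave the caller's parameters untouched
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            grads = backward(p, x, y)
            for name in p:
                p[name] -= learning_rate * grads[name]
    return p

initial_loss = mean_squared_error(params)
trained = train(params)
final_loss = mean_squared_error(trained)
print(initial_loss, final_loss)
```

Because every operation in `forward` is differentiable, `backward` can propagate the error from the output back to each parameter. The same pattern scales to larger architectures, where automatic differentiation libraries usually take over the manual chain-rule bookkeeping.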

More recently, transformers based solely on attention have achieved state-of-the-art results in natural language processing tasks. Models like BERT learn bidirectional representations from unlabeled text through self-supervised pretraining on massive corpora (Devlin et al., 2019). In reinforcement learning, deep neural networks have enabled algorithms such as AlphaGo and its successor AlphaZero, the latter mastering chess, shogi, and Go purely through self-play (Silver et al., 2018).
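The attention operation at the core of transformers can be sketched as scaled dot-product attention, following the formulation in Vaswani et al. (2017). This is a simplified sketch: the token vectors are hypothetical, and the learned query, key, and value projections used in real transformers are omitted, so the same vectors serve as queries, keys, and values.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    largest = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(q . k / sqrt(d_k)) applied to values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for a sequence of three tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
print(weights)
```

Every token attends to every other token in a single step, so no recurrent connection is needed to relate distant positions in the sequence.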

Ongoing research on neural architecture design, optimization techniques, and the integration of neuroscience principles continues to push the boundaries of what neural networks can achieve across a diverse range of real-world applications. Their ability to learn hierarchical representations makes them a widely used tool for tackling challenging problems in computer vision, natural language processing, speech recognition, and beyond.

Marvin Minsky’s pioneering work on neural networks provides a conceptual framework for modeling cognition and intelligence that has not yet been exploited to its full potential. This chapter builds on Minsky’s foundational ideas to present a version of neural networks that captures key aspects of thinking and reasoning. The approach uses straightforward terminology to describe how knowledge is acquired, represented, and used to enable decentralized decision-making. The architecture is general enough to remain applicable as techniques progress. Specifically, it focuses on the core capabilities of encoding perceptions and memories, recognizing patterns, making inferences, and selecting actions. Just as the brain coordinates specialized regions to produce cognition, this model coordinates computational modules to exhibit “intelligent” behavior. Although simplified, this conceptual neural network aims to distill the essence of reasoning into an accessible framework for thought. The model provides a starting point for understanding and developing artificial intelligence systems that learn, think, and make decisions like humans.

References

[1] Devlin, J., Chang, M., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. NAACL.

[2] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., … & Bengio, Y. (2014). Generative adversarial nets. NeurIPS.

[3] Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., … & Lillicrap, T. (2018). A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science, 362(6419), 1140-1144.

[4] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., … & Polosukhin, I. (2017). Attention is all you need. NeurIPS.