“I hate everything that merely instructs me without augmenting or directly invigorating my activities”

— Goethe

Memory is a critical component of logic: a reasonable statement needs memory support to be effective in any context. Consider the summation of two variables x and y, where x + y = z. If a computer stores the expression x + y = z in memory, it can accept inputs for x and y and calculate the output z. Storing the expression is what makes this computation possible. The real enabling function, however, is the “+” operator, whose implementation is already available in the memory of almost any computer. Without pre-recorded utility functions for operators such as “+” and “=”, the computer cannot produce the required result.
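A minimal Python sketch of this example follows. It assumes a toy “memory” modeled as a dictionary that maps operator symbols to functions; `OPERATOR_MEMORY`, `STORED_EXPRESSION`, and `evaluate_sum` are hypothetical names chosen for illustration. The stored expression yields z only when the “+” it refers to has a pre-recorded function, and the “=” is modeled implicitly by returning the result.

```python
import operator

# Hypothetical "memory" of pre-recorded utility functions, keyed by operator symbol.
OPERATOR_MEMORY = {"+": operator.add}

# The stored expression x + y = z, kept as (left operand, operator, right operand).
STORED_EXPRESSION = ("x", "+", "y")


def evaluate_sum(x, y, memory):
    """Compute z for the stored expression, or fail if the operator is not in memory."""
    left, symbol, right = STORED_EXPRESSION
    bindings = {left: x, right: y}
    if symbol not in memory:
        raise LookupError(f"no pre-recorded function for operator {symbol!r}")
    # "=" is implicit: the returned value is what gets bound to z.
    return memory[symbol](bindings[left], bindings[right])


print(evaluate_sum(2, 3, OPERATOR_MEMORY))  # 5

try:
    evaluate_sum(2, 3, {})  # empty memory: the expression alone cannot be evaluated
except LookupError as err:
    print(err)
```

The same stored expression succeeds or fails depending only on what the memory holds, which is the dependency the paragraph describes.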

More generally, any expression, mathematical or linguistic, requires access to pre-recorded or “learned” information to be meaningful. In the graphical framework introduced previously, decentralized knowledge is recorded using distributed logic, but a memory slot is still necessary to allow reasonable dynamism in interpreting stimuli or situations. In Minsky’s work (1973), an information retrieval network (IRN), or memory, connects inter-frame structures and enables the continuous replacement of frames within frame-systems so that they better match perceived situations.

In this framework, the information retrieval network (IRN) functions much as Minsky conceived it. It provides access to inter-frame systems, supplying frames with terminal assignments and marker conditions that better match the evolving perceived reality, and so enables dynamism in processing situations (see Figure X). As shown, the IRN coordinates and circulates information between inter-frame structures (an inter-frame structure may contain n different frames). The IRN appears as an entity separate from the frame-systems. However, this separation breaks down once the frame-systems are assumed to already contain collaborative frames: coordination then becomes self-enforced, and collaboration between systems can produce new results such as new viewpoints or action sequences. In this sense, the IRN is presumed to mirror stigmergy, the indirect coordination seen in swarm behavior, which is derived logically in the final phases of the framework in this technical work on artificial general intelligence.
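The replacement behavior of the IRN can be sketched in Python under simplifying assumptions; this is an illustration, not a definitive implementation. Each frame is modeled as a set of terminals, each guarded by a marker condition written as a predicate, and the IRN (a hypothetical `InformationRetrievalNetwork` class) keeps the current frame if the perceived situation still satisfies its marker conditions, otherwise returning the first stored frame that does, together with its terminal assignments. The `room` and `corridor` frames are invented examples, and the sketch does not model collaborative frames or stigmergy.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

# A marker condition is a predicate that a value must satisfy to fill a terminal.
MarkerCondition = Callable[[Any], bool]


@dataclass
class Frame:
    name: str
    # Terminal name -> marker condition that any assignment to it must meet.
    terminals: Dict[str, MarkerCondition] = field(default_factory=dict)

    def assign(self, situation: Dict[str, Any]) -> Optional[Dict[str, Any]]:
        """Return terminal assignments if the situation meets every marker condition, else None."""
        assignments = {}
        for terminal, condition in self.terminals.items():
            if terminal not in situation or not condition(situation[terminal]):
                return None
            assignments[terminal] = situation[terminal]
        return assignments


@dataclass
class InformationRetrievalNetwork:
    # Hypothetical memory: frames drawn from the linked frame-systems, in lookup order.
    frames: List[Frame]

    def retrieve(
        self, situation: Dict[str, Any], current: Optional[Frame] = None
    ) -> Optional[Tuple[Frame, Dict[str, Any]]]:
        """Keep the current frame if it still fits; otherwise supply the first replacement that does."""
        candidates = [current] if current is not None else []
        candidates += [f for f in self.frames if f is not current]
        for frame in candidates:
            assignments = frame.assign(situation)
            if assignments is not None:
                return frame, assignments
        return None  # no stored frame matches the perceived situation


room = Frame("room", {"walls": lambda n: n >= 3, "door": lambda d: d is True})
corridor = Frame("corridor", {"walls": lambda n: n == 2})
irn = InformationRetrievalNetwork([room, corridor])

frame, assignments = irn.retrieve({"walls": 2}, current=room)
print(frame.name, assignments)  # corridor {'walls': 2}
```

Ordering the candidates with the current frame first reflects the idea that a frame is only replaced when it can no longer be made to fit.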

The structural framework developed so far explains much of the thinking process. Decentralized knowledge can be recorded through distributed logic, and the information retrieval network (IRN), or memory, enables the continuous replacement of frames within frame-systems to match the evolving perceived reality. However, the framework still lacks a technical methodology that would make it machine-programmable: it remains fragmented and must be unified in terms of goals, performance, and action.

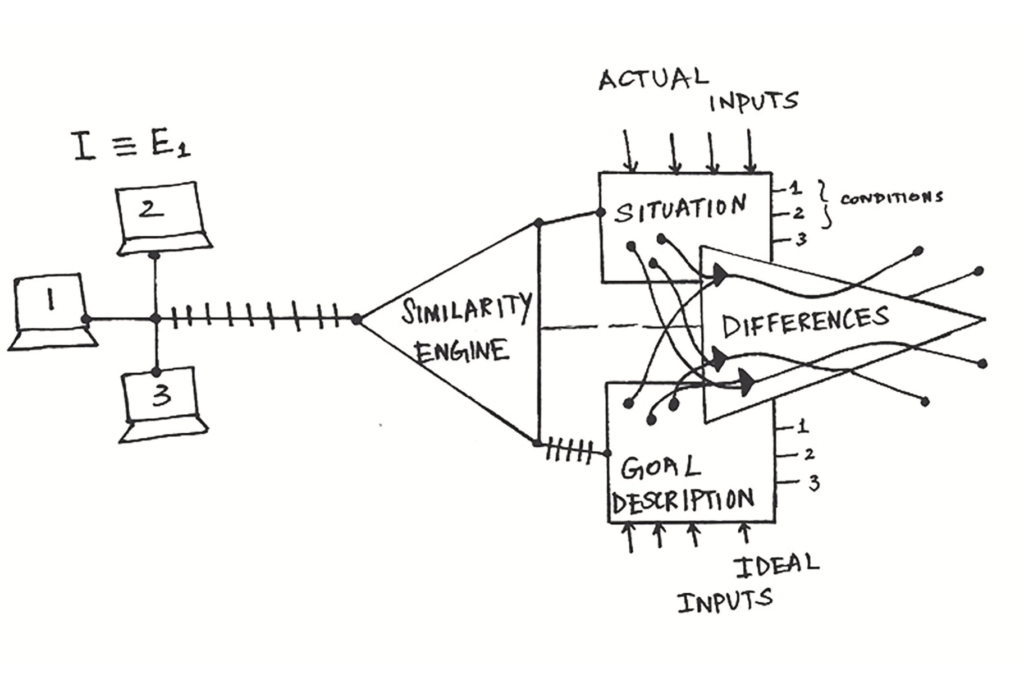

In The Society of Mind (1986), Minsky describes goal-driven machines called “general problem solvers” or difference engines. He postulates the following properties for a difference engine (DE, see Figure Y):

- A DE must contain a description of a “desired” situation.

- It must have subagents that detect differences between the desired and actual situations.

- Each subagent must act to reduce the difference that aroused it.
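These three properties can be sketched in Python as follows. The sketch assumes that situations are dictionaries of numeric features and that each subagent watches exactly one feature; `DifferenceEngine`, `Subagent`, and the fixed `step` size are hypothetical choices for illustration. A subagent is aroused only when its feature differs from the desired description, and its action moves the actual value toward the desired one.

```python
from dataclasses import dataclass
from typing import Dict

Situation = Dict[str, float]


@dataclass
class Subagent:
    feature: str       # the kind of difference this subagent detects
    step: float = 1.0  # hypothetical largest correction it can make per action

    def difference(self, desired: Situation, actual: Situation) -> float:
        return desired[self.feature] - actual[self.feature]

    def act(self, desired: Situation, actual: Situation) -> None:
        """Reduce the difference that aroused this subagent by at most one step."""
        diff = self.difference(desired, actual)
        if abs(diff) <= self.step:
            actual[self.feature] = desired[self.feature]
        else:
            actual[self.feature] += self.step if diff > 0 else -self.step


class DifferenceEngine:
    def __init__(self, desired: Situation):
        self.desired = dict(desired)  # description of the "desired" situation
        self.subagents = [Subagent(feature) for feature in self.desired]

    def run(self, actual: Situation, max_steps: int = 100) -> Situation:
        actual = dict(actual)
        for _ in range(max_steps):
            # Only subagents that detect a nonzero difference are aroused.
            aroused = [s for s in self.subagents if s.difference(self.desired, actual) != 0]
            if not aroused:
                break  # the actual situation now matches the desired one
            for subagent in aroused:
                subagent.act(self.desired, actual)
        return actual


engine = DifferenceEngine({"temperature": 21.0, "light": 3.0})
print(engine.run({"temperature": 18.0, "light": 5.0}))  # {'temperature': 21.0, 'light': 3.0}
```

Snapping to the desired value when the remaining difference is within one step avoids floating-point drift that could otherwise leave a subagent permanently aroused.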

These properties give the DE a way to act on discrepancies between desired and actual situations: its subagents embody action strategies that “diminish differences” between ideal and real conditions, measured against the description of the desired state that the DE contains. At this modeling stage, a similarity-difference engine (SDE), which pairs a similarity engine with the DE, fits into the current framework and completes the cycle of dynamically programming decentralized knowledge into machines. In this arrangement, the similarity engine connects the frame-system with the DE. Simply put, by analyzing the actual situation, the similarity engine selects a suitable frame from the system and passes its closest ideal inputs to the DE as a goal description.
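The similarity engine’s role can be sketched in the same hedged way. The sketch assumes, hypothetically, that each frame in the frame-system stores ideal values for numeric features and that similarity is the negative Euclidean distance over the features a frame shares with the observed situation; the text does not prescribe a measure, so this is a stand-in. `select_goal` returns the name of the closest frame and its ideal values, which would serve as the DE’s goal description.

```python
import math
from typing import Dict, Tuple

Situation = Dict[str, float]


def similarity(ideal: Situation, actual: Situation) -> float:
    """Hypothetical similarity: negative Euclidean distance over shared features."""
    shared = ideal.keys() & actual.keys()
    if not shared:
        return -math.inf  # nothing to compare: least similar
    return -math.sqrt(sum((ideal[k] - actual[k]) ** 2 for k in shared))


def select_goal(frame_system: Dict[str, Situation], actual: Situation) -> Tuple[str, Situation]:
    """Choose the frame closest to the actual situation; its ideal inputs become the goal description."""
    name = max(frame_system, key=lambda n: similarity(frame_system[n], actual))
    return name, dict(frame_system[name])


frame_system = {
    "reading": {"light": 5.0, "noise": 1.0},
    "sleeping": {"light": 0.0, "noise": 0.0},
}
print(select_goal(frame_system, {"light": 4.0, "noise": 3.0}))
# ('reading', {'light': 5.0, 'noise': 1.0})
```

Returning a copy of the frame’s ideal values keeps the DE from mutating the stored frame while it works toward the goal.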

The frame-system, the difference engine (DE), and the similarity engine (SE) together form a cerebral unit of intelligence (CUI, see Figure Z).

Key features of a CUI include:

- It contains a frame-system, similarity engine, and difference engine

- The frame-system enables perception

- The similarity engine connects the frame-system to the DE by selecting an appropriate frame to provide a goal description to the DE

- The DE receives the goal description and generates action strategies to reduce discrepancies between actual and desired situations

Frames and frame-systems serve purely analytical functions, but a unit of intelligence requires perception, analysis, and action. A CUI becomes equivalent to an element E of a miniature swarm (Figure X in the article on swarm intelligence) when it follows the Perceive, Analyze, Act (PAA) process:

- Perceive: The unit perceives a stimulus/situation and produces corresponding frames and frame-systems

- Analyze: The similarity engine concurrently analyzes the actual situation, as represented by the frame-system, and the desired situation required by the DE, which is pre-set in memory (the information retrieval network). From this dual analysis, the similarity engine provides the DE with suitable goal descriptions and ideal inputs.

- Act: The DE formulates action strategies based on the goal description.
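One pass of the PAA process can be sketched as a single hypothetical `CerebralUnit` class. Every concrete choice here is an assumption made for illustration: perception copies the observed feature values into a frame describing the actual situation, the similarity engine picks the stored frame at the smallest Euclidean distance, and the DE returns one corrective action per differing feature, equal to the remaining difference.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Features = Dict[str, float]


@dataclass
class CerebralUnit:
    # Frame-system held in memory: frame name -> ideal (desired) feature values.
    frame_system: Dict[str, Features]

    def perceive(self, stimulus: Dict[str, float]) -> Features:
        """Perceive: turn a raw stimulus into a frame describing the actual situation."""
        return {k: float(v) for k, v in stimulus.items()}

    def analyze(self, actual: Features) -> Tuple[str, Features]:
        """Analyze: the similarity engine selects the closest frame as the goal description."""
        def distance(ideal: Features) -> float:
            shared = ideal.keys() & actual.keys()
            if not shared:
                return math.inf
            return math.sqrt(sum((ideal[k] - actual[k]) ** 2 for k in shared))

        name = min(self.frame_system, key=lambda n: distance(self.frame_system[n]))
        return name, self.frame_system[name]

    def act(self, goal: Features, actual: Features) -> List[Tuple[str, float]]:
        """Act: the DE emits one action per difference, sized to remove that difference."""
        return [(k, goal[k] - actual[k]) for k in goal if k in actual and goal[k] != actual[k]]

    def step(self, stimulus: Dict[str, float]) -> Tuple[str, List[Tuple[str, float]]]:
        actual = self.perceive(stimulus)
        frame_name, goal = self.analyze(actual)
        return frame_name, self.act(goal, actual)


cui = CerebralUnit({
    "reading": {"light": 5.0, "noise": 1.0},
    "sleeping": {"light": 0.0, "noise": 0.0},
})
print(cui.step({"light": 4, "noise": 3}))
# ('reading', [('light', 1.0), ('noise', -2.0)])
```

Keeping the three stages as separate methods mirrors the PAA split, so any one of them (for example, the similarity measure) can be swapped without touching the others.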

Minsky’s models presume that minds are made up of interacting agencies. As noted earlier, brains resemble swarm systems of indirectly coordinating neurons. The CUI proposes a neural architecture in which analytical components interact strategically; each such unit logically represents an individual cognitive element. These neural prototypes will later be used to construct a paradigm of sentience suited to pure mathematical analysis. In summary, the CUI integrates perceptual, analytical, and action capabilities through structured interactions among its components, providing a strategic neural architecture for modeling cognition and sentience.

References

[1] Minsky, M. (1973). A framework for representing knowledge. In P. Winston (Ed.), The Psychology of Computer Vision (pp. 1-81). McGraw-Hill.

[2] Minsky, M. (1986). The Society of Mind. Simon & Schuster (Touchstone).