“Minds are simply what brains do.”

“A frame is a data structure for representing a stereotyped situation.”

— Marvin Lee Minsky

Marvin Minsky, co-founder of the MIT AI Lab and an early pioneer of artificial intelligence, advocated non-hierarchical, decentralized programs for building intelligent machines. In his seminal 1973 paper “A Framework for Representing Knowledge,” Minsky proposes a “partial theory of thinking”: when one encounters a new situation, one selects a remembered “framework” from memory and adapts it to fit reality by changing details as necessary. Using precise terminology, he decomposes thinking into modular “fragments” for visual, linguistic, and other domains. This fragmentation aligns with logical notions such as syllogism and transitivity. In “The Society of Mind” (1986), Minsky argues that complex mental phenomena emerge from combinations of simpler components.

Despite extensive neuroscience research on the biology and electrical activity of the brain, the specific mechanisms of human thought remain largely unexplained; neither Eastern mysticism nor Western science has fully accounted for the rapid, adaptive processes of cognition. As noted previously, brains resemble swarm systems of indirectly coordinating neurons. Similarly, Minsky theorizes that the mind comprises interacting “agencies” that give rise to fast-changing “processes.” This article attempts to integrate Minsky’s concepts into a unified framework, adopting the terminology of his 1973 paper to characterize mental phenomena in response to realistic stimuli.

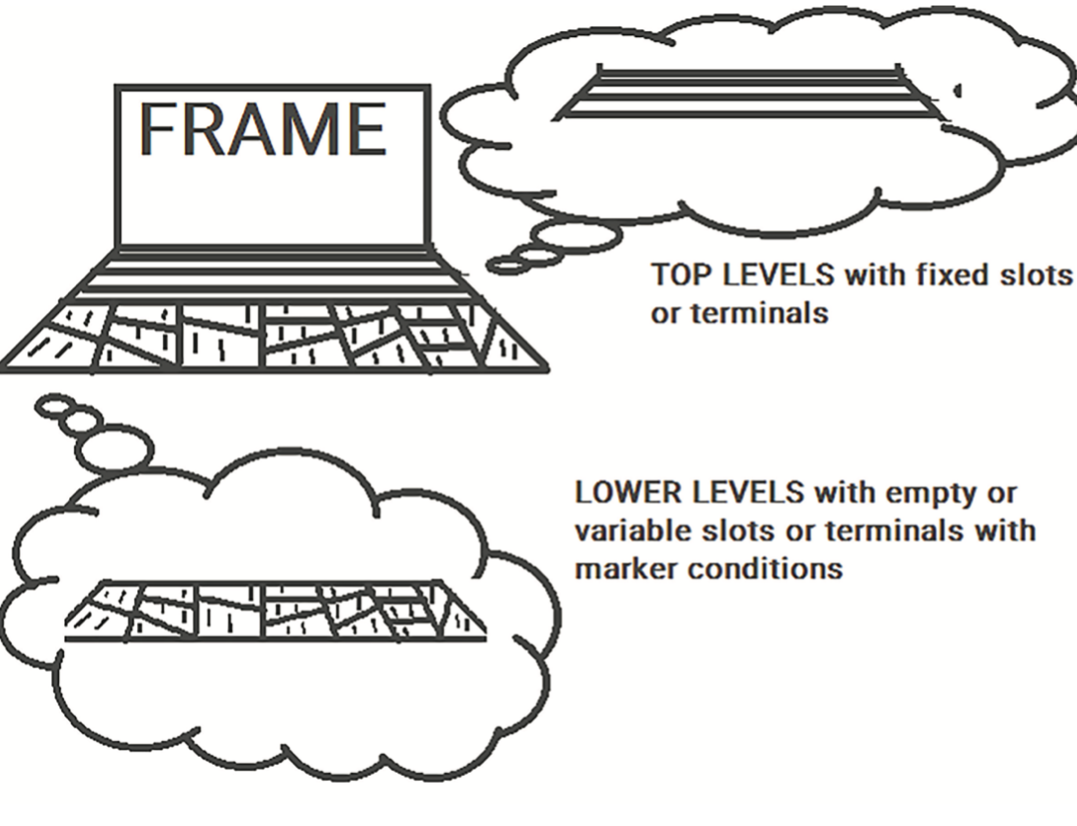

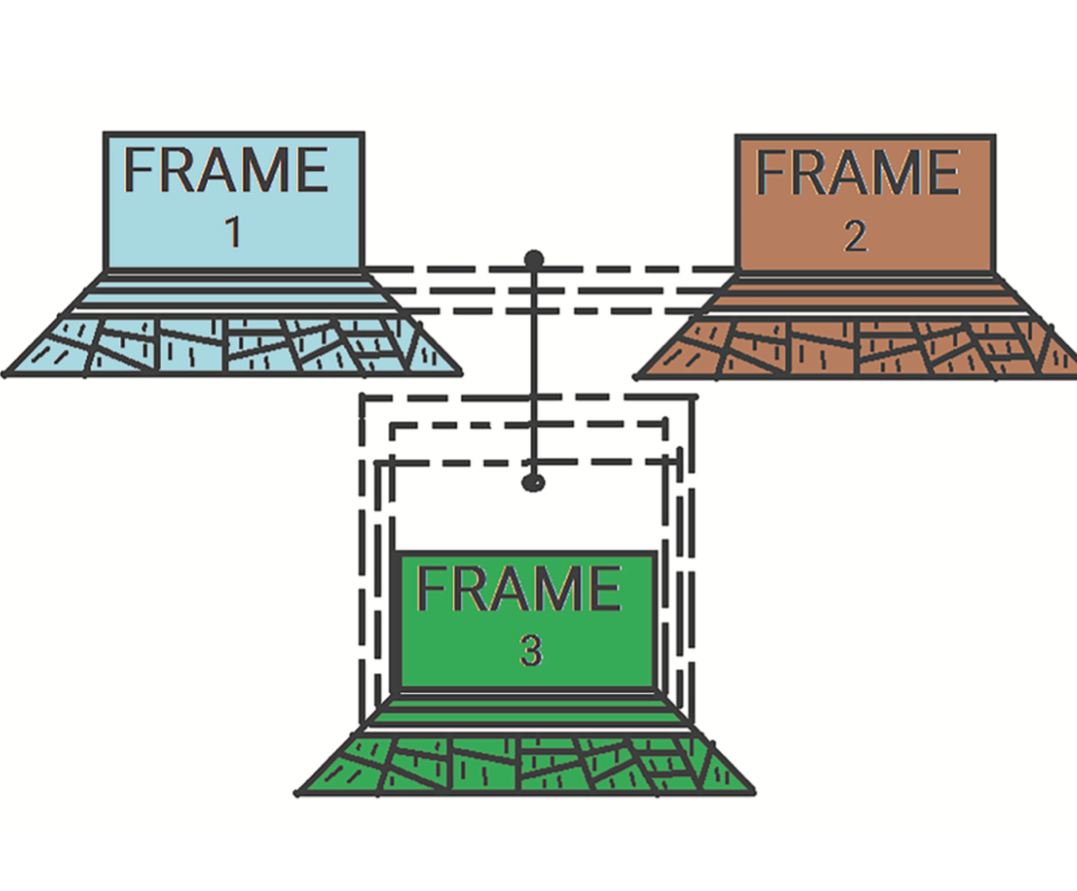

Minsky’s theory of frames describes how knowledge is represented in the mind. A frame contains fixed “top-level” information that is always true about a concept, and “lower-level” terminals, or slots, that are filled in with specific details. Each terminal involves assignments and marker conditions. Assignments fill the slots with specific knowledge or data about the concept; they are usually smaller sub-frames. Marker conditions specify what an assignment must satisfy, and in that sense act as pointers that retain some essence of a stimulus or situation; more complex marker conditions can specify relations among the assignments of several terminals. Terminals are normally pre-filled with default assignments, general or most-likely details that keep the frame flexible until the situation supplies specifics. Frames are connected into frame-systems whose frames share terminals and represent different perspectives on a stimulus. For example, a frame-system for visual scene analysis contains multiple frames describing an object from different 3D viewpoints. Overall, frames represent concepts with fixed and flexible components that can be tailored to specific instances and linked together into an integrated knowledge representation. See Figures X and Y.
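The structure just described can be sketched as a small Python data type. This is a minimal illustration under our own assumptions, not Minsky’s formalism: marker conditions are modeled as predicates, a terminal reports its default until an observation satisfies its marker, and the names `Terminal`, `Frame`, and the “room” example are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

# A marker condition is modeled as a predicate that an assignment must satisfy.
Marker = Callable[[Any], bool]


@dataclass
class Terminal:
    """A lower-level slot with a marker condition and a default assignment."""
    marker: Marker
    default: Any = None
    assignment: Any = None  # None means nothing specific has been observed yet

    def assign(self, value: Any) -> bool:
        """Accept a value only if it satisfies the marker condition."""
        if self.marker(value):
            self.assignment = value
            return True
        return False

    def value(self) -> Any:
        """Return the observed assignment, falling back to the default."""
        return self.default if self.assignment is None else self.assignment


@dataclass
class Frame:
    name: str
    top_level: Dict[str, Any]  # fixed facts, always true for this situation
    terminals: Dict[str, Terminal] = field(default_factory=dict)

    def fill(self, observations: Dict[str, Any]) -> Dict[str, bool]:
        """Offer observations to matching terminals; report which were accepted."""
        return {
            key: self.terminals[key].assign(val)
            for key, val in observations.items()
            if key in self.terminals
        }

    def view(self) -> Dict[str, Any]:
        """Current interpretation: top-level facts plus terminal values."""
        values = {key: term.value() for key, term in self.terminals.items()}
        return {**self.top_level, **values}


# Hypothetical "room" frame: a candle fails the light marker, so the default stays.
room = Frame(
    name="room",
    top_level={"wall_count": 4, "has_floor": True},
    terminals={
        "door_count": Terminal(marker=lambda v: isinstance(v, int) and v >= 1, default=1),
        "light": Terminal(marker=lambda v: v in {"window", "lamp"}, default="window"),
    },
)
print(room.fill({"door_count": 2, "light": "candle"}))  # {'door_count': True, 'light': False}
print(room.view())  # {'wall_count': 4, 'has_floor': True, 'door_count': 2, 'light': 'window'}
```

Rejecting the mismatched value, rather than overwriting the slot, is what lets the default assignment survive; the fixed top-level facts are never touched by observations.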

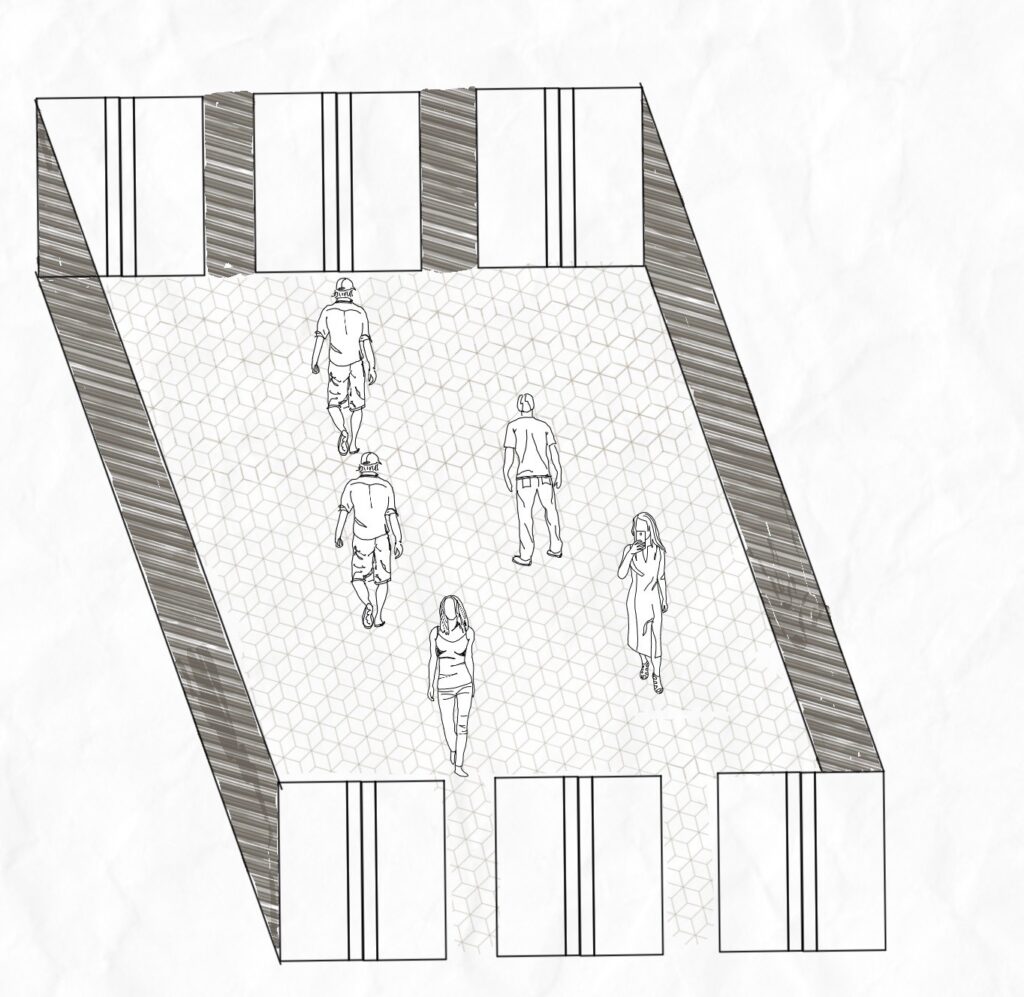

Consider students using the elevators in a university library to travel between floors (see Figure Z). The library has 14 levels, with 6 elevator doors on each level (3 on either side of the cuboidal structure). When a student calls an elevator from a given level, the best-placed elevator signals its arrival with a light and a sound and travels to that level, arriving on either side. Two notable behaviors arise. First, once inside an elevator, students frequently lose track of its orientation relative to the floor layout; this is evident when they pause on reaching their destination, unsure which direction leads to their intended location. Second, students exiting in groups tend to follow whoever is in front of them rather than reasoning about direction independently.

This example illustrates how frame-theory concepts might be applied. The spatial positions of the elevators on each cuboidal level, relative to the building, could be represented as a system of frames. A program built on such a system, with embedded perceptual data, would hold contextual knowledge analogous to that of the students’ minds in this scenario. A humanoid robot with default assignments could even mimic the students’ bypassing of logic, following the crowd whenever a default assignment for a familiar situation is triggered. Overall, frame theory offers a model of knowledge representation that encodes fixed truths, flexible details, and interlinked perspectives, and that can bypass logic given appropriate programming. The elevator example shows how such a system could replicate flawed human spatial reasoning and blind adherence to group behavior.
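As a rough sketch of the elevator scenario, assume (hypothetically) that each level’s elevator doors open either east or west of the core, that students face outward on exit, and that their destination lies north. Each side is a frame whose top-level fact is the heading one faces on exit; both frames share the `destination_heading` and `arrival_side` terminals. An unfilled `arrival_side` stands in for a student who has lost track of orientation, and in that case a default assignment copies the crowd instead of reasoning. The geometry and all names are illustrative assumptions.

```python
from typing import Dict, Optional

# Hypothetical compass geometry: LEFT_OF[facing] is the heading on one's left.
LEFT_OF = {"north": "west", "west": "south", "south": "east", "east": "north"}
# Inverse mapping: RIGHT_OF[facing] is the heading on one's right.
RIGHT_OF = {v: k for k, v in LEFT_OF.items()}

# One frame per side of the elevator core; the top-level fact is the exit heading.
SIDE_FRAMES: Dict[str, str] = {"east": "east", "west": "west"}


def egocentric_turn(facing: str, target: str) -> str:
    """Translate a fixed building heading into a turn relative to the viewer."""
    if target == facing:
        return "straight"
    if LEFT_OF[facing] == target:
        return "left"
    if RIGHT_OF[facing] == target:
        return "right"
    return "back"


def exit_decision(shared: Dict[str, Optional[str]], crowd_turn: str) -> str:
    """Choose a turn on exit from the shared terminals of the frame-system."""
    side = shared.get("arrival_side")
    if side is None:
        # Orientation terminal unfilled: the default assignment bypasses
        # spatial reasoning and copies the people in front.
        return crowd_turn
    # Selecting the frame for the arrival side acts as the transformation between frames.
    return egocentric_turn(SIDE_FRAMES[side], shared["destination_heading"])


for side in ("west", "east", None):
    shared = {"destination_heading": "north", "arrival_side": side}
    print(side, exit_decision(shared, crowd_turn="left"))
# west right
# east left
# None left
```

Students who exit on opposite sides need opposite turns to reach the same destination; that is precisely the information lost when the `arrival_side` terminal goes unfilled, and the crowd default happens to be right only half the time.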

Definitions:

- Frame: A network of interconnected nodes and relations that represents a concept.

- Top-levels: Fixed components of a frame containing information that is consistently true about the concept.

- Lower-levels: Flexible components of a frame with slots that can be filled with specific details about the concept.

- Terminal assignments: Values, usually smaller sub-frames, that fill the lower-level terminals and provide detailed knowledge or data about the concept.

- Marker conditions: Conditions within the lower levels that an assignment must satisfy; they act as pointers that retain some essence of a stimulus or situation related to the concept. Complex marker conditions can specify relations among the assignments of several terminals.

- Default assignments: General or most-likely values with which terminals are pre-filled, allowing the frame to apply flexibly to varying instances until specific data replaces them.

- Frame-system: A set of associated frames connected by shared terminals to represent different perspectives on a stimulus or situation.

Characteristics:

- Transformations between the frames of a system mirror the effects of actions, shifting conceptual attention or viewpoint. In visual scene analysis, they represent changes of viewpoint; in non-visual frames, they may represent conceptual or causal shifts.

- Frames in a system share the same terminals, which lets them coordinate information gathered from different viewpoints.

- Frames and frame-systems function as analytical tools to examine a stimulus or situation relative to predefined perceptual imprints derived from prior experience.
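Shared terminals and viewpoint transformations can be illustrated with a cube. In this hypothetical sketch, each frame lists the cube faces visible from one viewpoint, all frames read from a single shared terminal table, and `MOVE_RIGHT` is the transformation for walking around the cube. A face colour recorded from the front is therefore already expected after the move, while a newly visible face remains unfilled. The face labels and viewpoints are our own assumptions.

```python
from typing import Dict, List, Optional

# Shared terminals: one slot per cube face (A-D around the sides, E top, F bottom).
# Every frame reads this same dictionary, so an assignment made from one
# viewpoint is available from all the others.
shared_terminals: Dict[str, Optional[str]] = {face: None for face in "ABCDEF"}

# Hypothetical frames: which shared terminals are visible from each viewpoint.
FRAMES: Dict[str, List[str]] = {
    "front": ["A", "B", "E"],
    "right": ["B", "C", "E"],
    "back": ["C", "D", "E"],
    "left": ["D", "A", "E"],
}

# Transformation "walk around to the right": maps each frame to the next one.
MOVE_RIGHT = {"front": "right", "right": "back", "back": "left", "left": "front"}


def observe(frame: str, seen: Dict[str, str]) -> None:
    """Fill shared terminals with the faces actually visible from this frame."""
    for face, colour in seen.items():
        if face in FRAMES[frame]:
            shared_terminals[face] = colour


def expect(frame: str) -> Dict[str, Optional[str]]:
    """What the viewer expects to see from a frame, read from shared terminals."""
    return {face: shared_terminals[face] for face in FRAMES[frame]}


observe("front", {"A": "red", "B": "blue", "E": "white"})
view = MOVE_RIGHT["front"]
print(view, expect(view))  # right {'B': 'blue', 'C': None, 'E': 'white'}
```

Because the frames hold references to terminals rather than copies of data, no information has to be transferred when the viewpoint changes; only the set of visible slots differs.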

Application of frame theory:

The properties of frames and frame-systems can be leveraged in programming applications. To model evolutionary strategies that improve over time, a basic preprint frame, with top-levels, marker conditions, terminal assignments, and default assignments, is first applied to represent a potential situation or stimulus. The default assignments incorporate dynamic strategies: small changes in the perceived stimulus are accepted, the defaults are refined accordingly, and previous data instances are stored in an information retrieval network (IRN), or memory. Through repeated experience of a situation with minor variations, the accumulated memory and real-time state information can support analytical perception. Similarly, a molded frame-system produces holistic perception through the interplay of its frames’ multiple dynamic viewpoints. As Minsky describes, “the inter-frame structures make possible other ways to represent knowledge about facts, analogies, and other information useful for understanding” a stimulus.
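One way to make these dynamic defaults concrete is sketched below. The update rule is our assumption, an exponential moving average that nudges the default toward each accepted observation; `tolerance` stands in for what counts as a “minute change,” and a plain list stands in for the IRN. Observations outside the tolerance are rejected, which in Minsky’s account is the point where the information retrieval network would propose a replacement frame. The class name and the elevator-wait example are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AdaptiveTerminal:
    """A terminal whose default drifts toward accepted observations (assumed scheme)."""
    default: float
    tolerance: float  # largest deviation treated as a "minute change"
    rate: float = 0.5  # fraction of the gap the default moves per accepted value
    memory: List[float] = field(default_factory=list)  # stored instances (IRN stand-in)

    def experience(self, observed: float) -> bool:
        """Accept a small variation and refine the default; reject large ones."""
        if abs(observed - self.default) > self.tolerance:
            # Too different for this frame; a replacement frame would be needed.
            return False
        self.memory.append(observed)
        # Exponential moving average: move part of the way toward the observation.
        self.default += self.rate * (observed - self.default)
        return True


# Hypothetical terminal: expected elevator wait in seconds.
wait = AdaptiveTerminal(default=30.0, tolerance=10.0)
results = [wait.experience(x) for x in [34.0, 36.0, 80.0, 35.0]]
print(results, wait.default, wait.memory)  # [True, True, False, True] 34.5 [34.0, 36.0, 35.0]
```

Each accepted repetition shifts the default, so the tolerance window also moves with experience; the outlier of 80 seconds leaves both the default and the memory unchanged.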

In summary, key aspects include:

- Frame properties can be modeled to suit programming needs

- Evolutionary strategies utilize basic preprint frames that accept incremental improvements through dynamic defaults

- Memory and real-time state store changes to enable analytical perception over repeated experiences

- Frame-systems produce holistic perception via viewpoint interplay between frames

- Inter-frame structures represent knowledge for understanding stimuli

The framework described here draws on Minsky’s concepts to enable decentralized knowledge recording through distributed logic. As outlined in Minsky’s article “A Framework for Representing Knowledge” (Minsky, 1973), various models or “paradigms” share a common vocabulary: frames, frame-systems, terminal assignments, marker conditions, information retrieval networks, and default assignments. This framework integrates those notions into a unified structure capable of incorporating diverse information. Readers are encouraged to study Minsky’s original examples to understand firsthand the ideas used here, although familiarity with his paper is not required for the mathematical architecture that follows. The next chapter examines the role of memory (information retrieval networks) and neural engines in order to propose a consistent neural architecture centered on a fundamental unit termed the cerebral unit of intelligence (CUI). The CUI makes it possible to model analytical units that represent cognitive elements in swarm interactions.

References

[1] Minsky, M. (1973). A framework for representing knowledge. In P. Winston (Ed.), The Psychology of Computer Vision (pp. 1-81). McGraw-Hill.

[2] Minsky, M. (1986). The Society of Mind. Simon & Schuster (Touchstone).